Load balance topologies

As mentioned in the Load balancing introduction page, there are a number of ways you can combine tor daemon instances to increase service reliability, based on either running instances in parallel or splitting the publisher node from the backends.

The following sections give some examples.

Hardmaps

In the Onionspray (and EOTK) lingo, a hardmap is an Onion Service proxy configuration which uses the tor daemon directly, i.e., every running tor daemon acts both as the Onion Service descriptor publisher and as a backend.

In a project config file, a hardmap can be added as follows to map example.org and all its subdomains to the exampd5xof3blvnynaqvmusnq3wx6c7mstcl6aph3yiw4fnt5yzqj3ad.onion Onion Service:

hardmap exampd5xof3blvnynaqvmusnq3wx6c7mstcl6aph3yiw4fnt5yzqj3ad.onion example.org

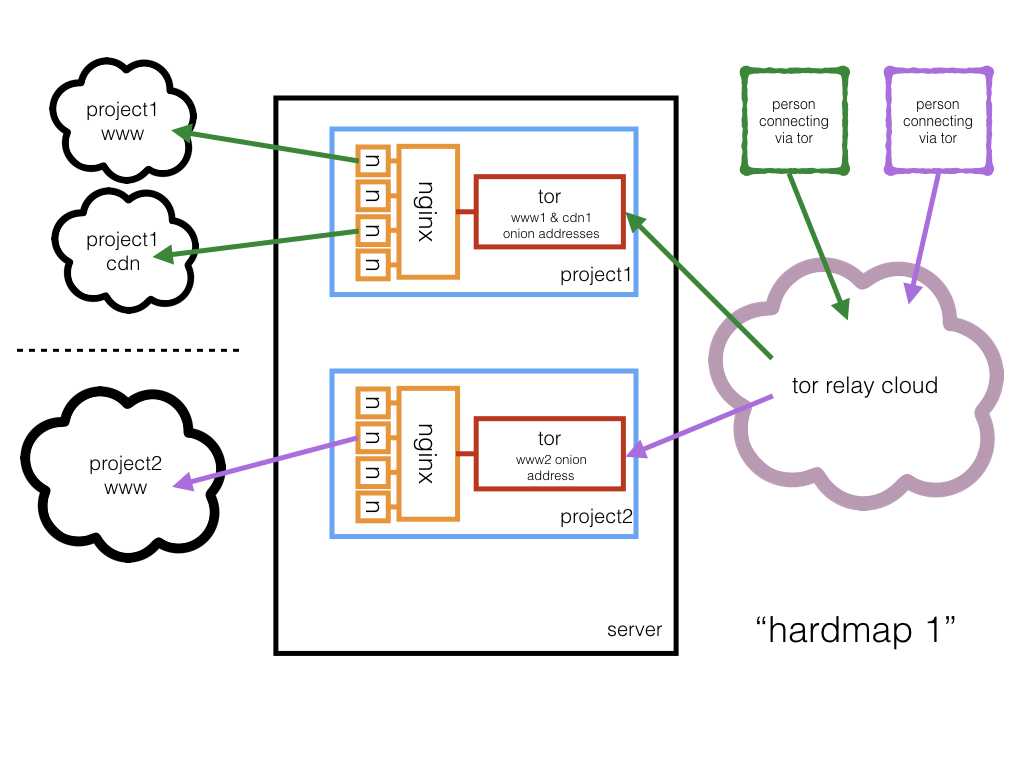

The diagram below shows a single server using Onionspray to host two different projects (project1 and project2), each one having its own tor daemon hosting its own set of Onion Services:

So project1 could host Onion Services for example.org and project2 for example.net, which tends to distribute the overall load of your Onionspray setup between CPUs (say cpu1 serving sites from project1 and cpu2 handling project2). The actual pattern may be a bit more complicated, since each Onionspray project consists of a tor daemon process and multiple processes from an NGINX instance.

CPU-based load balancing

Note that both project1 and project2 could also be used to host exactly the same Onion Services. In that case, Onionspray would be configured as the simplest form of a load balancing system, as each project's tor daemon instance could run on a different CPU. This may be useful to overcome C Tor's single-thread limitation, but may not be needed in a setup relying on Arti (which is intended to support multi-threading natively).

CPU-based load balancing for a single service has limited effectiveness

Be aware that running the same Onion Service on multiple CPUs of the same machine is not very effective, since descriptors are not re-published very often, and you may end up with an alternating pattern in which a single CPU is used more than the other during each publishing period.
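The alternating pattern can be illustrated with a toy model. The sketch below assumes that new clients always pick up the most recently published descriptor, so new connections land on whichever instance published last. The function name `active_instance`, the offsets, and the 60-minute period are hypothetical values chosen for illustration, not Tor defaults, and real behavior also depends on factors such as client-side descriptor caching.

```python
def active_instance(t, offsets, period):
    """Return the index of the instance that published its descriptor last.

    Toy model (assumption): every instance re-publishes each `period`
    time units, starting at its own offset, and the latest upload wins.
    """
    best, best_time = None, None
    for i, offset in enumerate(offsets):
        if t < offset:
            continue  # this instance has not published anything yet
        # Time of this instance's most recent publication at or before t.
        last = offset + ((t - offset) // period) * period
        if best_time is None or last > best_time:
            best, best_time = i, last
    return best


# Two tor daemons on the same machine, publishing 30 minutes apart.
timeline = [active_instance(t, offsets=[0, 30], period=60)
            for t in (10, 40, 70, 100)]
print(timeline)
```

In this model the load is not shared at any given moment: it moves from one instance to the other each time a descriptor is re-published.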

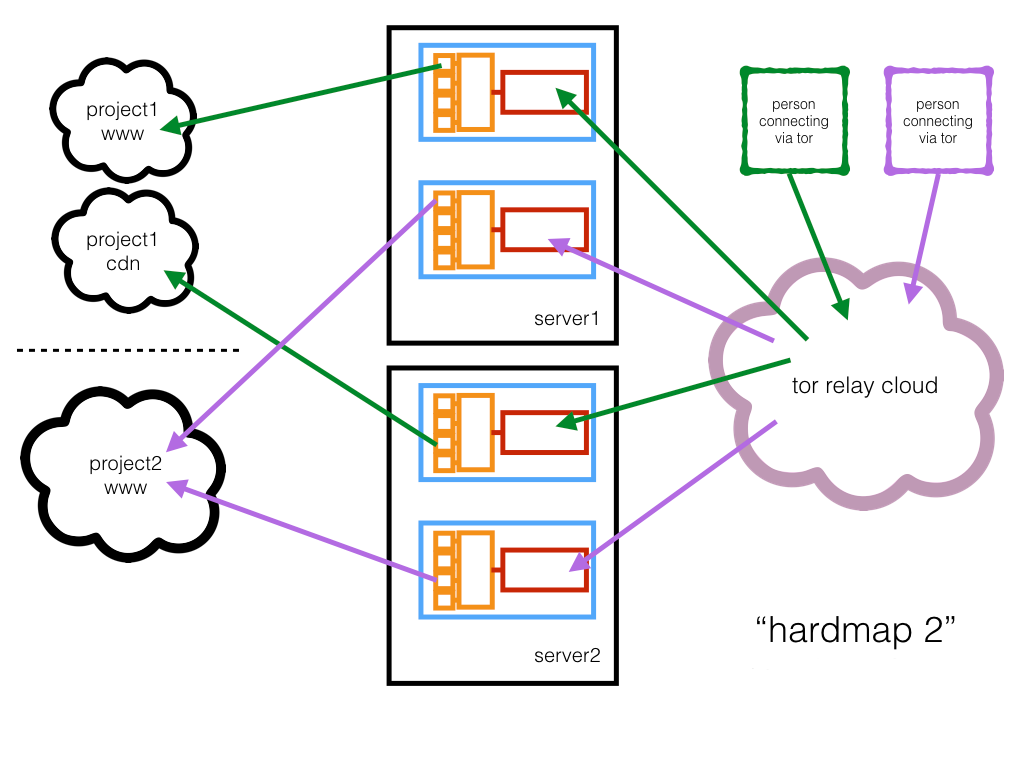

What can run on a single server can be replicated to another server, as in the diagram below:

Here you can choose to replicate Onion Services across multiple servers, and you can also replicate them across multiple CPUs on the same server. In principle, there's no limit on the number of servers or projects, as long as there are resources to accommodate the setup.

Softmaps

In Onionspray (and in EOTK), a softmap configuration uses Onionbalance to load balance across backends.

Example configuration line mapping example.org and all its subdomains to the exampd5xof3blvnynaqvmusnq3wx6c7mstcl6aph3yiw4fnt5yzqj3ad.onion Onion Service:

softmap exampd5xof3blvnynaqvmusnq3wx6c7mstcl6aph3yiw4fnt5yzqj3ad.onion example.org
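The hardmap and softmap lines on this page share the same three-field shape: directive, .onion address, and domain. As a minimal sketch of that structure, the following Python helper splits such a line and checks that the address looks like a v3 onion address (56 base32 characters followed by `.onion`). The names `Mapping` and `parse_map_line` are hypothetical and are not part of Onionspray; the sketch only handles the three-field form shown here, not any other syntax the config format may accept.

```python
import re
from typing import NamedTuple

# A v3 onion address is 56 base32 characters followed by ".onion".
ONION_V3 = re.compile(r"[a-z2-7]{56}\.onion")


class Mapping(NamedTuple):
    kind: str    # "hardmap" or "softmap"
    onion: str   # the .onion address
    domain: str  # the mapped domain, e.g. example.org


def parse_map_line(line: str) -> Mapping:
    """Split a '<hardmap|softmap> <onion> <domain>' line into its fields."""
    fields = line.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    kind, onion, domain = fields
    if kind not in ("hardmap", "softmap"):
        raise ValueError(f"unknown directive: {kind!r}")
    if not ONION_V3.fullmatch(onion):
        raise ValueError(f"not a v3 onion address: {onion!r}")
    return Mapping(kind, onion, domain)


example = parse_map_line(
    "softmap exampd5xof3blvnynaqvmusnq3wx6c7mstcl6aph3yiw4fnt5yzqj3ad.onion example.org"
)
print(example.kind, example.domain)
```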

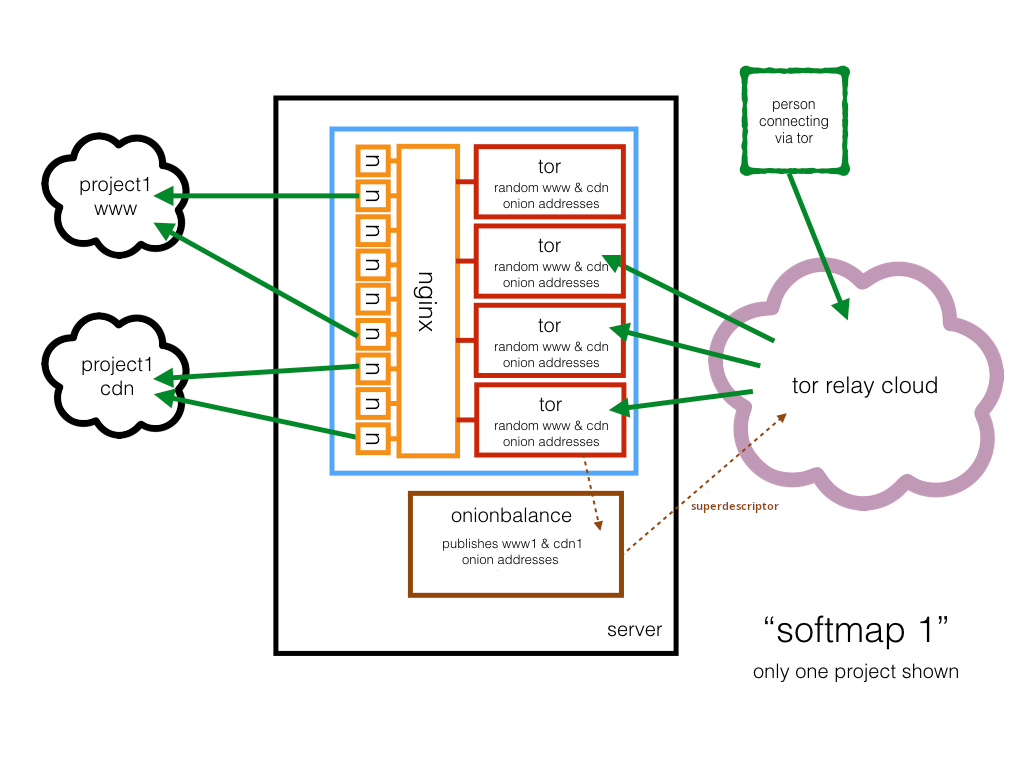

The next diagram shows a single server with a single Onionbalance publisher "frontend" managing the superdescriptor for Onion Services in all projects from an Onionspray installation (although for simplification only a single project is shown), achieving CPU load-balancing since the softmap is now handled by more than one tor daemon instance:

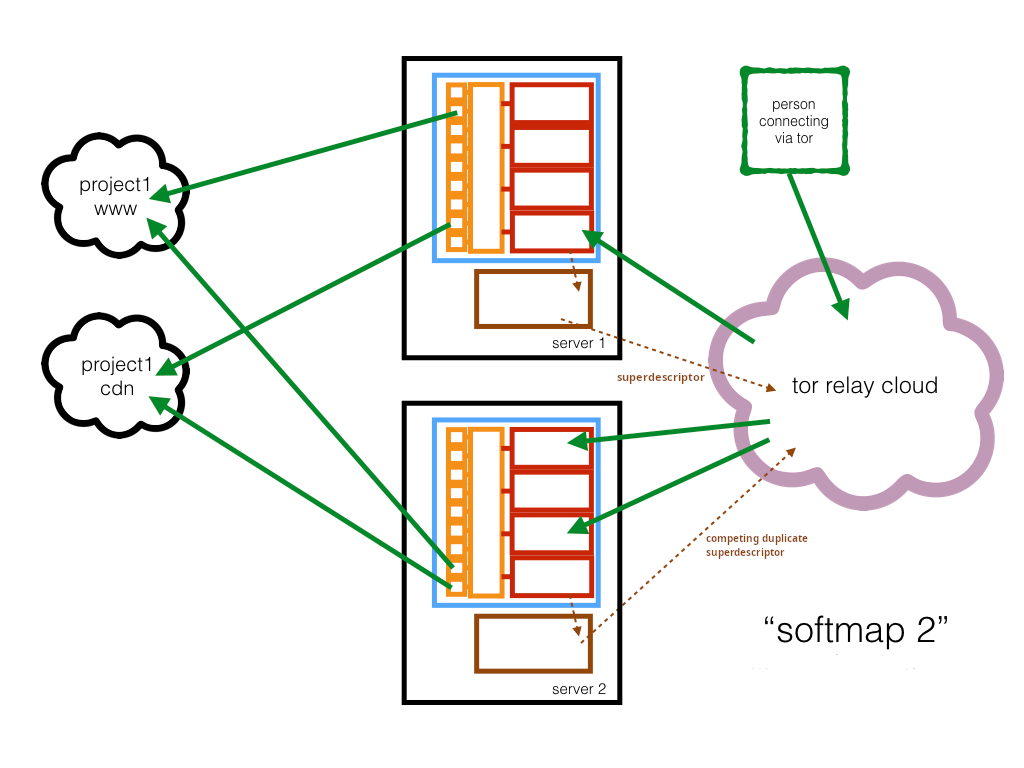

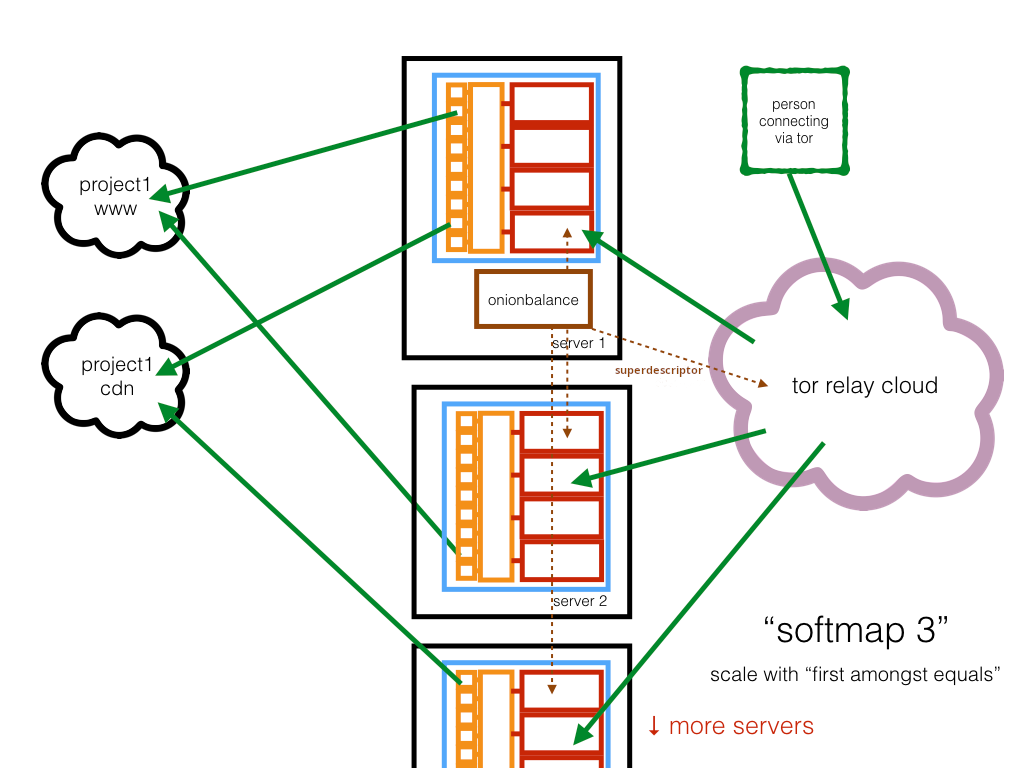

The setup above can be replicated. The next diagram shows a similar setup, but with two servers:

And the following diagram shows the setup with 3+ servers:

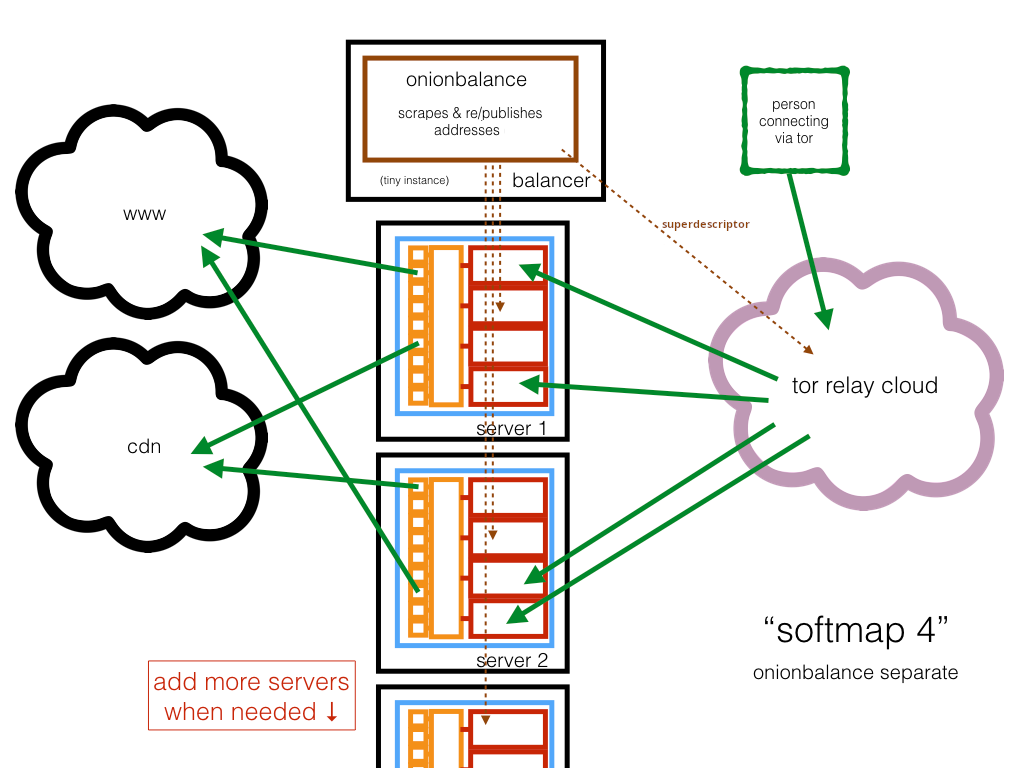

It's also possible to run the Onionbalance publisher on a separate, dedicated server:

Running publishers away from the backends has the additional advantage of isolating the Onion Service identity keys from the tor backends, which gives the .onion addresses additional protection if the backends are compromised, for example through a security bug or an operational vulnerability.

The diagram shows only a single Onionbalance instance for all Onionspray servers, but you could also run more Onionbalance instances in parallel, to ensure there's no single point of failure in your setup.

This last example is covered in the Softmaps document.
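The publisher/backend split can be pictured with a small data model. In the sketch below, each backend contributes only its introduction points, and a separate publisher step merges them into one list for the superdescriptor, which is why only the publisher needs access to the service identity key. This is an illustrative model, not Onionbalance's actual selection algorithm; the names `Backend` and `build_superdescriptor`, the round-robin strategy, and the `max_intro_points` cap are assumptions made for the example.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass
class Backend:
    name: str
    intro_points: list[str]  # introduction points advertised by this backend


def build_superdescriptor(backends, max_intro_points=20):
    """Interleave intro points from all backends, up to a cap (assumed value)."""
    merged = []
    # Take one intro point from each backend in turn (round-robin).
    for group in zip_longest(*(b.intro_points for b in backends)):
        for intro_point in group:
            if intro_point is not None and len(merged) < max_intro_points:
                merged.append(intro_point)
    return merged


backends = [
    Backend("server1", ["a1", "a2", "a3"]),
    Backend("server2", ["b1", "b2"]),
]
print(build_superdescriptor(backends, max_intro_points=4))
```

Because the merged list draws from every backend, clients using the superdescriptor are spread across the backends without any backend ever holding the frontend key.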