Softmap guide¶

This is a guide for configuring load balancing in Onionspray using

the softmap approach.

Onionbalance installation

This guide assumes that Onionbalance is already installed, either manually or as part of the installation process, and that it is available on your $PATH. Please check that you have it, and install it otherwise.

Examples written for a Debian-like system

The examples in this guide were written for Debian-like operating systems, but you can adapt them for your own distribution.

Basic setup¶

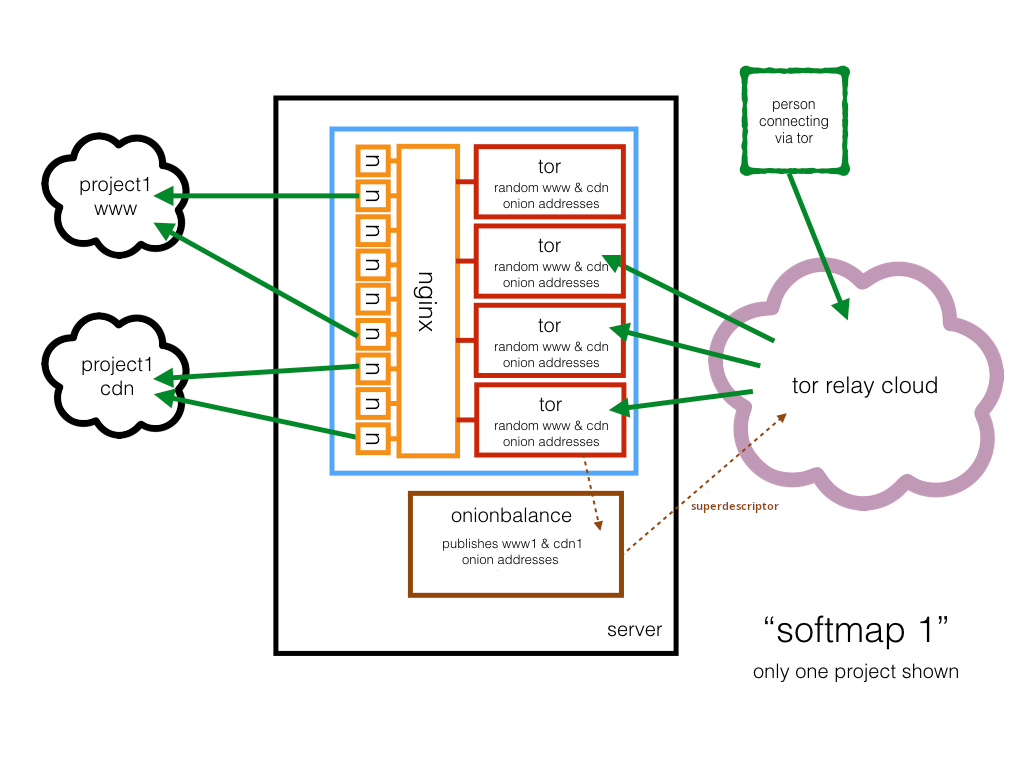

We'll start this guide by showing how to create the basic "softmap 1"

topology that load balances Onion Services by splitting load into

backend tor daemon instances that may run in distinct CPU cores from the same

server:

This will give you a basic understanding of how Onionbalance integrates with Onionspray, so you can later proceed to expand your topology to multiple servers.

Start by setting up Onionspray:

sudo apt update

sudo apt install -y git

git clone https://gitlab.torproject.org/tpo/onion-services/onionspray.git

cd onionspray

./opt/build-debian-bookworm.sh

Now let's examine the contents of the example configuration for softmaps:

user@onionspray:/path/to/onionspray$ cat examples/softexample.tconf

# -*- conf -*-

# softmaps use onionbalance software to loadbalance across workers

set project softexample

softmap %NEW_V3_ONION% example.com

softmap %NEW_V3_ONION% torproject.org

softmap %NEW_V3_ONION% wikipedia.org

This will create three Onion Services, each one proxying content to distinct backend sites.

Let's use this template:

user@onionspray:/path/to/onionspray$ ./onionspray config examples/softexample.tconf

:::: configure examples/softexample.tconf ::::

onionspray: info: Processing examples/softexample.tconf

onionspray: info: Populating softexample.conf with onions

onionspray: info: Please be patient...

........

Signature Value:

Signature Value:

Signature Value:

mapping gnh7e65ffncwh3dluek7nxm3dfadkhqkbwoogkv6ascaj7ommf7t5syd.onion to example.com project softexample

mapping 3i6j7ncz57v7y2lxzozxmtgq7id5ppso2sw36puq7m7ziqbctbkdmfid.onion to torproject.org project softexample

mapping mubc36hz44omtoqxrxptc7ptcev3gb722qb6dxhx2hgigewqphsdirqd.onion to wikipedia.org project softexample

onionspray: done. logfile is log/configure7359.log

As expected, three .onion addresses were created. Since the mappings are

softmaps, the Onionbalance configuration was also created in the

onionbalance folder.

We can now start both Onionspray and Onionbalance:

user@onionspray:/path/to/onionspray$ ./onionspray start softexample

:::: start softexample ::::

user@onionspray:/path/to/onionspray$ ./onionspray ob-start -a

onionspray: gathering mappings for Onionbalance for projects: softexample

onionspray: project softexample contains 6 mappings

onionspray: project softexample uses 3 master onions

onionspray: project softexample uses 2 worker onions

onionspray: building Onionbalance configurations...

onionspray: building Onionbalance-Tor configurations...

onionspray: starting Onionbalance-Tor

onionspray: waiting 60 seconds for Onionbalance-Tor to bootstrap...

onionspray: starting Onionbalance

Checking the mappings for our project reveals something interesting:

user@onionspray:/path/to/onionspray$ ./onionspray maps -a

:::: maps softexample ::::

gnh7e65ffncwh3dluek7nxm3dfadkhqkbwoogkv6ascaj7ommf7t5syd.onion example.com softexample softmap via am6rt4fka2mvdxn3nipio5y4dv6k2l3z45imu2arlkzhq7il23twlayd.onion

3i6j7ncz57v7y2lxzozxmtgq7id5ppso2sw36puq7m7ziqbctbkdmfid.onion torproject.org softexample softmap via am6rt4fka2mvdxn3nipio5y4dv6k2l3z45imu2arlkzhq7il23twlayd.onion

mubc36hz44omtoqxrxptc7ptcev3gb722qb6dxhx2hgigewqphsdirqd.onion wikipedia.org softexample softmap via am6rt4fka2mvdxn3nipio5y4dv6k2l3z45imu2arlkzhq7il23twlayd.onion

gnh7e65ffncwh3dluek7nxm3dfadkhqkbwoogkv6ascaj7ommf7t5syd.onion example.com softexample softmap via u44b36kiok55k5ybjd624pg4n3ygwrfes2qpx7h65huxndenrvieziad.onion

3i6j7ncz57v7y2lxzozxmtgq7id5ppso2sw36puq7m7ziqbctbkdmfid.onion torproject.org softexample softmap via u44b36kiok55k5ybjd624pg4n3ygwrfes2qpx7h65huxndenrvieziad.onion

mubc36hz44omtoqxrxptc7ptcev3gb722qb6dxhx2hgigewqphsdirqd.onion wikipedia.org softexample softmap via u44b36kiok55k5ybjd624pg4n3ygwrfes2qpx7h65huxndenrvieziad.onion

Note that each site above is listed twice:

- The first time it's listed as being mapped via the am6rt4fka2mvdxn3nipio5y4dv6k2l3z45imu2arlkzhq7il23twlayd.onion address.

- The second time, via u44b36kiok55k5ybjd624pg4n3ygwrfes2qpx7h65huxndenrvieziad.onion.

These two are the backend .onion addresses, and they both listen for

connections from all sites in the softexample project. That means any client

accessing gnh7e65ffncwh3dluek7nxm3dfadkhqkbwoogkv6ascaj7ommf7t5syd.onion (the

generated .onion address for example.com) or any other "frontend" address

will be actually connecting to one of these two backends.

These backend .onion addresses are also disposable, meaning that you don't need to worry about backing them up: users won't need to handle or bookmark these addresses. You just need to make sure to back up the keys for the main/frontend .onion addresses.

The list of frontend maps managed by Onionbalance can be retrieved by the following command:

user@onionspray:/path/to/onionspray$ ./onionspray ob-maps -a

gnh7e65ffncwh3dluek7nxm3dfadkhqkbwoogkv6ascaj7ommf7t5syd.onion example.com

3i6j7ncz57v7y2lxzozxmtgq7id5ppso2sw36puq7m7ziqbctbkdmfid.onion torproject.org

mubc36hz44omtoqxrxptc7ptcev3gb722qb6dxhx2hgigewqphsdirqd.onion wikipedia.org

Finally, let's check both Onionspray and Onionbalance status:

user@onionspray:/path/to/onionspray$ ./onionspray status -a

:::: onionbalance processes ::::

PID TTY STAT TIME COMMAND

7610 ? Sl 0:03 tor -f /path/to/onionspray/onionbalance/tor.conf

7614 pts/0 Sl 0:04 onionbalance

:::: status softexample ::::

PID TTY STAT TIME COMMAND

7546 ? Sl 0:03 tor -f /path/to/onionspray/projects/softexample/hs-1/tor.conf

7549 ? Sl 0:04 tor -f /path/to/onionspray/projects/softexample/hs-2/tor.conf

7556 ? Ss 0:00 nginx: master process nginx -c /path/to/onionspray/projects/softexample/nginx.conf

user@onionspray:/path/to/onionspray$ ./onionspray ob-status -a

:::: onionbalance processes ::::

PID TTY STAT TIME COMMAND

7610 ? Sl 0:03 tor -f /path/to/onionspray/onionbalance/tor.conf

7614 pts/0 Sl 0:04 onionbalance

user@onionspray:/path/to/onionspray$

Note that, by default, two tor daemon instances are running:

- The first using configuration from the projects/softexample/hs-1/ folder.

- The second with configuration from the projects/softexample/hs-2/ folder.

Only a single Onionbalance instance is responsible for publishing descriptors for all frontend .onion addresses, and for all projects.

Shared backend instances

Onionspray was designed so that all onionsites in a project share

the same set of backend Onion Services from the same tor daemon backend

instances. This means that these Onion Services and tor daemon backends

will carry the combined load from all onionsites in the project.

Load can be further distributed by splitting sites into different projects.

Complete setup¶

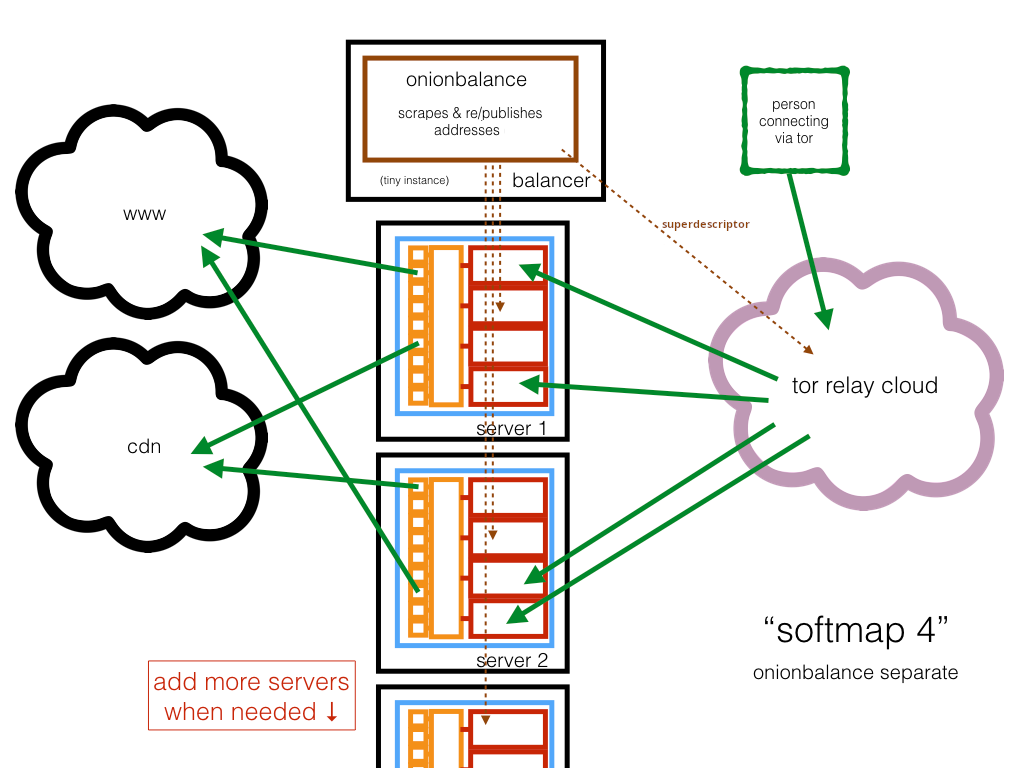

In the rest of this document, we are going to show how to build and maintain the "softmap 4" topology:

... using the example configuration for softmaps.

Systems¶

- Assume one publisher/controller/balancer instance (which can also run worker instances):

    - e.g. an AWS micro instance, named "Brenda (the Balancer)"

- Assume one or more worker instances:

    - e.g. an AWS medium/large instance, named "Wolfgang (the Worker)"

The publisher/controller/balancer mainly runs Onionbalance and also controls

the worker instances through SSH connections. The controller instance can also

have its own worker instances, depending on the contents of the

onionspray-workers.conf file.

Installation process¶

Provision your Debian-like systems for both Brenda and Wolfgang, as you usually would. For example:

- Make sure to have automatic system upgrades enabled.

- Set up inbound SSH / systems administration, etc.

- Set up (if not already present) a non-root user for Onionspray purposes. You can even use a pre-existing non-root account like ubuntu or www.

- Follow the Onionspray installation instructions.

- Set up passwordless SSH from Brenda to Wolfgang, as the non-root user, and check that SSH works from Brenda to Wolfgang (user@brenda$ ssh wolfgang uptime).
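The passwordless SSH item in the list above can be sketched as a small helper, to be run on Brenda as the non-root user. This is only one way to do it and is not part of Onionspray: the function name, the key path and the choice of an empty passphrase are assumptions made for illustration, and your site policy may call for an agent-held key instead.

```shell
# setup_passwordless_ssh HOST
# Hypothetical helper: create a key if none exists, install it on HOST,
# then confirm that a non-interactive login works.
setup_passwordless_ssh() {
    key="$HOME/.ssh/id_ed25519"   # assumed key path; adjust as needed
    # Generate a key with an empty passphrase only if it is missing.
    [ -f "$key" ] || ssh-keygen -t ed25519 -N "" -f "$key" || return 1
    # Install the public key on the worker (asks for the password once).
    ssh-copy-id -i "$key.pub" "$1" &&
    # BatchMode makes ssh fail instead of prompting, so success here
    # means passwordless login really works.
    ssh -o BatchMode=yes "$1" uptime
}
```

With the function defined, setup_passwordless_ssh wolfgang would perform the same check as the ssh wolfgang uptime command mentioned above.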

Configuration¶

These steps need to be done in a specific order:

Change directory into the onionspray installation directory, eg:

cd onionspray

Create a site.tconf file in the onionspray directory. You may use

the example configuration for softmaps as a sample to copy:

cp examples/softexample.tconf site.tconf # and edit to your needs

Do the following to create the onion keys and NGINX/Tor configuration files:

./onionspray config site.tconf

Review the configuration

You may want to take a moment to review the content of the generated

site.conf (note the new name) before progressing to the next step.

Delete or rename the template

You may want to delete or rename site.tconf (note old name) at this

point, to reduce confusion and/or risk of editing the wrong file in the future.

Tell Brenda about Wolfgang the Worker, either by running the following command or by editing the file directly (one hostname per line):

echo wolfgang > onionspray-workers.conf

Do the following to push the configurations to the workers:

./onionspray ob-remote-nuke-and-push

Destructive push!

This is a destructive push. You do not want to run this command whilst

"live" workers are listed in onionspray-workers.conf; doing so would impact

user service in a bad (although recoverable) way.

Test and launch¶

Check that SSH works:

./onionspray ps

Start the workers. This creates the worker onion addresses, which are essential for the following steps:

./onionspray start -a

Check the workers are running:

./onionspray status -a

Start Onionbalance. This fetches the worker onion addresses (via SSH),

creates the Onionbalance configs, and launches the daemon:

./onionspray ob-start -a

Check mappings and status:

./onionspray ob-maps -a

./onionspray ob-status -a

Launching at boot¶

Do this on all machines, because presumably you will want workers and the balancer to autostart:

./onionspray make-init-script

sudo cp onionspray-init.sh /etc/init.d/

sudo update-rc.d onionspray-init.sh defaults

Maintenance¶

Adding an extra worker ("William")¶

Stop Onionbalance; don't worry, your service will keep working for several hours via Wolfgang:

onionspray ob-stop # this only affects Brenda.

Rename the existing onionspray-workers.conf file.

mv onionspray-workers.conf onionspray-workers.conf.old

Create a new onionspray-workers.conf, containing the new workers:

echo william > onionspray-workers.conf

Feel free to edit/add more workers to this new file.

Push the configs to the new workers:

onionspray ob-remote-nuke-and-push

Destructive push

Remember that this command is destructive, hence the rename of the old

onionspray-workers.conf file, so that Wolfgang is not affected and keeps your

service up while you are doing this.

Start the new worker:

onionspray start -a

Check that the new workers are running:

onionspray status -a

Append the old workers to the new list:

cat onionspray-workers.conf.old >> onionspray-workers.conf

Feel free to go sort/edit/prune onionspray-workers.conf if you like.

Restart Onionbalance, using the expanded list of all workers:

onionspray ob-start -a

onionspray ob-maps -a

onionspray ob-status -a

Don't forget to configure Onionspray to "launch at boot" on William / the new servers, else sadness will result.
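As a sketch of the list merge described in the steps above, the following appends the old worker list to the new one, then sorts and de-duplicates the result. It runs against scratch copies in a temporary directory, and the file contents are made up for illustration; on a real installation you would operate on onionspray-workers.conf itself.

```shell
# Scratch copies so that no live file is touched (hypothetical contents).
workdir="$(mktemp -d)"
printf '%s\n' william > "$workdir/onionspray-workers.conf"
printf '%s\n' wolfgang william > "$workdir/onionspray-workers.conf.old"

# Append the old list to the new one, as in the guide.
cat "$workdir/onionspray-workers.conf.old" >> "$workdir/onionspray-workers.conf"

# Sort, drop duplicate hostnames, and drop blank lines.
sort -u "$workdir/onionspray-workers.conf" | grep -v '^$' > "$workdir/merged"
mv "$workdir/merged" "$workdir/onionspray-workers.conf"

merged_workers="$(cat "$workdir/onionspray-workers.conf")"
echo "$merged_workers"
```

The result is one hostname per line with william and wolfgang each listed once, which is the shape the workers file is described as having.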

Removing a worker ("Wolfgang")¶

Now that we have Wolfgang and William running, perhaps we want to decommission Wolfgang?

Stop Onionbalance; don't worry, your service will keep working for several hours via William:

onionspray ob-stop # this only affects Brenda

Backup the existing onionspray-workers.conf file, in case of disaster:

cp onionspray-workers.conf onionspray-workers.conf.backup

Remove Wolfgang from onionspray-workers.conf by editing the file directly.

Check that the remaining workers are all still running:

onionspray status -a

Restart Onionbalance, using the reduced list of all workers:

onionspray ob-start -a

onionspray ob-maps -a # check this output to make sure Wolfgang is gone

onionspray ob-status -a

Wait until new descriptors are fully & recently pushed (see: ob-status -a).

Wait a little longer as a grace period.

Switch off Wolfgang.

I've made a minor tweak to my configuration...¶

Say you've edited a BLOCK regular expression, or changed the number of Tor

workers; you can push out a "spot" update to the configuration files at the

minor risk of breaking your entire service if you've made a mistake - so,

assuming that you don't make mistakes, do this:

onionspray config site.conf # on Brenda, to update the configurations

onionspray --local syntax # ignoring the workers, use NGINX locally to syntax-check the NGINX configs

onionspray ob-nxpush # replicate `nginx.conf` to the workers

onionspray nxreload

onionspray ob-torpush # replicate `tor.conf` to the workers

onionspray torreload

onionspray ps # check everything is still alive

onionspray status -a # check everything is still alive

onionspray ob-status -a # check everything is still alive
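If you would rather not assume that you don't make mistakes, one option is to chain the same commands so that the sequence halts at the first failure, for example before pushing a configuration that failed the syntax check. The wrapper below is a hypothetical sketch: the function name is invented, and it relies on the assumption (not confirmed by this guide) that each onionspray subcommand exits with a non-zero status when it fails.

```shell
# spot_update [CONF]
# Hypothetical wrapper around the commands listed above. Each step runs
# only if the previous one succeeded (assumes non-zero exit on failure).
spot_update() {
    onionspray config "${1:-site.conf}" &&  # regenerate configurations
    onionspray --local syntax &&            # local NGINX syntax check
    onionspray ob-nxpush &&                 # replicate nginx.conf
    onionspray nxreload &&
    onionspray ob-torpush &&                # replicate tor.conf
    onionspray torreload &&
    onionspray ps &&                        # check everything is alive
    onionspray status -a &&
    onionspray ob-status -a
}
```

Run it on Brenda as spot_update site.conf; a non-zero return means some step failed and the later steps were skipped.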

Something's gone horribly wrong...¶

If you do make mistakes and have somehow managed to kill all your tor daemons,

all your NGINX daemons, or (amusingly) both, you should undo that change that

you made and then:

onionspray config site.conf # on Brenda, to update the configurations

onionspray --local syntax # ignoring the workers, use NGINX locally to syntax-check the NGINX configs

onionspray shutdown # turn everything off

onionspray ob-nxpush # replicate `nginx.conf` to the workers

onionspray ob-torpush # replicate `tor.conf` to the workers

onionspray start -a # start worker daemons

onionspray status -a # check worker daemons are running

onionspray ob-start -a # start onionbalance; your site will gradually start to come back up

onionspray ob-status -a # check status of descriptor propagation

In the worst-case scenario you might want to replace the ob-nxpush and

ob-torpush with a single ob-remote-nuke-and-push.

Wholesale update of Onionspray¶

Once you work out what's going on, and how Onionspray works, you'll see a bunch of ways to improve on this; however if you want to update your entire Onionspray setup to a new version the safest thing to do is to set up and test an entirely new balancer instance (Beatrice?) and entirely new workers (I am running out of W-names) that Beatrice manages.

The goal will be to take Beatrice's deployment to just before the point

where Onionbalance is started (the "Start Onionbalance" step under "Test and launch" above). Then:

On Brenda, stop Onionbalance:

onionspray ob-stop # this only affects Brenda, stops her pushing new descriptors

On Beatrice, check that you've already started & checked Beatrice's workers, yes? If so, then start Onionbalance:

onionspray ob-start -a

On Beatrice, check mappings and status:

onionspray ob-maps -a

onionspray ob-status -a

Wait for Beatrice's descriptors to propagate (check with onionspray ob-status -a, plus a few

minutes' grace period) and, if everything is okay, on Brenda you can do:

onionspray shutdown # which will further stop Onionspray on all of Brenda's workers

Leave Brenda and her workers lying around for a couple of days in case you detect problems and need to swap back; then purge.

Quicker update procedure¶

If you are a lower-risk site and don't want to go through all this change

control, and if you know enough about driving git to do your own bookmarking

and rollbacks, you can probably get away with this sort of very-dangerous,

very-inadvisable-for-novices, "nuke-it-from-orbit" trick on Brenda:

git pull # see warning immediately below

onionspray config site.conf # generate fresh configurations

onionspray shutdown # your site will be down from this point

onionspray ob-remote-nuke-and-push # push the new configs, this will destroy worker key material

onionspray start -a # start worker daemons

onionspray status -a # check worker daemons are running

onionspray ob-start -a # start onionbalance; your site will gradually start to come back up

onionspray ob-status -a # check status of descriptor propagation

Onionspray changes

The Onionspray codebase may change rapidly. You might want to seek a stable

release bookmark or test a version locally before "diving-in" with a

randomly-timed git pull, else major breakage may result.

Backups¶

Assuming that there is enough disk space on Brenda, you might want to pull copies of all the configurations and logfiles on all the workers. To do that:

onionspray mirror # to build a local mirror of all worker Onionspray installations

onionspray backup # to make a compressed backup of a fresh mirror, for archiving

Tuning for small configurations¶

The value of tor_intros_per_daemon is set to 3 by default, in the

expectation that we will be using horizontal scaling (i.e.: multiple workers)

to gain performance, and that some of the workers may be down at any given

time.

Also Onionspray configures a semi-hardcoded number of tor daemons per worker, in

order to try and get a little more cryptographic multiprocessing out of Tor.

This value (softmap_tor_workers) is currently set to 2 and is probably

not helpful to change; the tor daemon itself is generally not a performance

bottleneck.

Overall, our theory is that if N=6 workers have M=2 tor daemons, each of

which has P=3 introduction points, then that provides a pool of N*M*P=36

introduction points for Onionbalance to scrape and attempt to synthesise into a

"production" descriptor for one of the public onions.

But if you are only using a single softmap worker then N=1 and so N*M*P

is 1*2*3=6, which is kinda small; in no way are 6 introduction points

inadequate for testing, but in production it does mean that basically all

circuit setups for any given onion will be negotiated through only 6 machines

on the internet at any given time; and that those 6 introduction points will be

servicing all connection-setups for all of the onion addresses that you

configure in a project. This could be substantial.
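The N*M*P arithmetic above can be checked with a few lines of shell. This is only a worked example of the formula from this section; the function name is made up, and nothing here reads or changes any Onionspray setting.

```shell
# intro_pool N M P
# N = workers, M = tor daemons per worker, P = introduction points per daemon
intro_pool() {
    echo $(( $1 * $2 * $3 ))
}

pool_six_workers="$(intro_pool 6 2 3)"   # N=6, M=2, P=3
pool_one_worker="$(intro_pool 1 2 3)"    # N=1, M=2, P=3

echo "six workers: $pool_six_workers introduction points"   # 36
echo "one worker:  $pool_one_worker introduction points"    # 6
```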

The current hard-limit cap for P / the number of introduction points in a

descriptor is 10, and Onionbalance uses a magic trick ("distinct

descriptors") to effectively multiply that number by 6, and so (in summary)

Onionspray can theoretically support 60 introduction points for any given softmap

Onion Address, which it constructs by scraping introduction points out of the

pool of worker onions.

But if you only have one worker, then by default Onionbalance only has 6 introduction points to work with.

In such circumstances I might suggest raising the value of P (i.e.:

tor_intros_per_daemon) to 8 or even 10 for single-worker configs, so that

(N*M*P=1*2*8=) 16 or more introduction points exist, so that Onionbalance has

a bit more material to work with; but a change like this is probably going to

be kinda "faffy" unless you are rebuilding from a clean slate. It may also lead

to temporary additional lag whilst the old introduction points are polled

when a worker has "gone down", if you restart workers frequently.

And/or/else, you could always add more workers to increase N.
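To see what raising P buys a single-worker setup, the sketch below prints the pool size for a few values of tor_intros_per_daemon next to the 60-point ceiling described above (10 introduction points per descriptor, multiplied by 6). The variable names are illustrative, and the values are the ones quoted in this section.

```shell
workers=1                 # N: a single softmap worker
daemons=2                 # M: tor daemons per worker (the default above)
cap=$(( 10 * 6 ))         # 10 intro points per descriptor, times 6

for intros in 3 8 10; do  # P: candidate tor_intros_per_daemon values
    pool=$(( workers * daemons * intros ))
    echo "P=$intros gives a pool of $pool (ceiling $cap)"
done
```

Even at P=10 a single worker offers 20 introduction points, well short of the ceiling, which is why adding workers remains the other lever.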

But what if my pool of introduction points exceeds 60?!?¶

That's fine; Onionbalance randomly samples from within that pool, so that

(averaged over time) all of the introduction points will see some traffic;

but don't push it too far because that would be silly and wasteful to no

benefit. For existing v2 onion addresses (16 characters long) the optimal size

of N*M*P is probably "anywhere between 18-ish and 60-ish".

FAQ¶

What if I want localhost as part of the pool of workers?¶

- See the Softmap 3 diagram.

- This works; do echo localhost >> onionspray-workers.conf:

    - If localhost/Brenda is the only machine, you don't really need an onionspray-workers.conf file.

- The string localhost is treated specially by Onionspray, and does not require SSH access.

- Alternative: read the other documentation, use hardmap, skip the need for Onionbalance.

Why install NGINX (and everything else) on brenda, too?¶

- Orthogonality: it means all machines are the same, for ease of reuse/debugging.

- Architecture: you may want to use Brenda for testing/development via hardmap deployments.

- Testing: you can use onionspray [--local] syntax -a to sanity-check NGINX config files before pushing.

How many workers can I have?¶

- In the default Onionspray config, you may sensibly install up to 30 workers (Wolfgang, William, Walter, Westley...).

- This is because Tor descriptor space will max-out at 60 daemons, and

Onionspray launches 2x daemons per worker.

- This is somewhat tweakable if it becomes really necessary.
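The 30-worker figure follows directly from the two numbers in the list above, as this small calculation shows. The variable names are illustrative only.

```shell
descriptor_slots=60        # daemons the descriptor space can hold
tor_daemons_per_worker=2   # default number of tor daemons per worker

max_workers=$(( descriptor_slots / tor_daemons_per_worker ))
echo "$max_workers"        # 30
```

Changing the number of tor daemons per worker changes the quotient accordingly.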

How many daemons will I get, by default?¶

- 2x tor daemons per worker. This is configurable.

- N NGINX daemons per worker, where N = number of cores. This is configurable.

What are the ideal worker specifications?¶

- Probably machines with between 4 and 20 cores, with memory to match.

- Large, fast local /tmp storage, for caching.

- Fast networking / good connectivity.

- Manually configure for (num tor daemons) + (num NGINX daemons) == (num CPU cores), approximately.

    - You probably only need 1 or 2 tor daemons to provide enough traffic to any machine.
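The core-budget rule of thumb in the list above can be worked out on the worker itself. The sketch assumes a Debian-like system where nproc (GNU coreutils) is available; it only prints a suggested split and does not write any Onionspray configuration.

```shell
cores="$(nproc)"     # CPU cores available on this worker
tor_daemons=2        # default tor daemons per worker, per this guide

# Give the remaining cores to NGINX, but never fewer than one daemon.
nginx_daemons=$(( cores - tor_daemons ))
if [ "$nginx_daemons" -lt 1 ]; then
    nginx_daemons=1
fi

echo "cores=$cores tor=$tor_daemons nginx=$nginx_daemons"
```

On machines with three or more cores the two counts add up to the core count exactly; on smaller machines the floor of one NGINX daemon means the sum slightly exceeds it.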